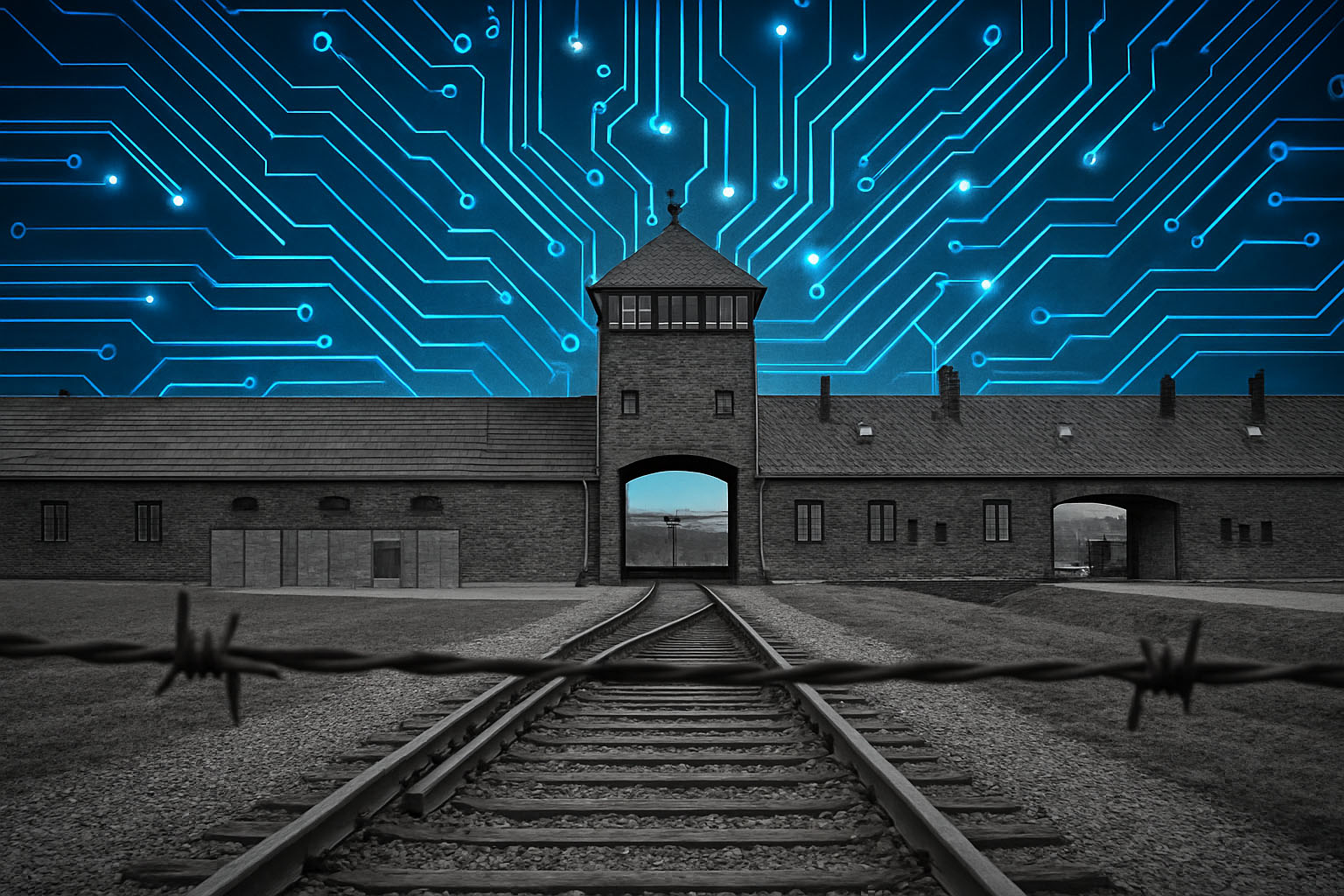

How will artificial intelligence models affect our understanding of the past and the lessons we derive from history? This issue is of immense concern as AI is becoming ubiquitous: 86% of college students report using the models (including 24% who use them daily). Perhaps nowhere is this more important than in remembrance of the Holocaust, which Jews have been trying to sustain for decades and which is continually under attack by antisemites who want to diminish it or use it for their own purposes.

What appears on the screens can be worrying: Recently Elon Musk’s AI chatbot Grok started spewing antisemitic posts before it was shut down. Last year, it was reported that Google’s AI model was refusing to say how many Jews died at the hands of the Nazis. Also in 2024, UNESCO (not always known for its sensitivity to Jewish issues) issued a report warning that AI models could rewrite the history of the Holocaust by inventing facts, falsifying evidence, and oversimplifying issues. These are problems of the models more generally and ones that may be especially challenging when it comes to the Holocaust due to the urge of some to rewrite history. More broadly, in March 2025, the Anti-Defamation League (ADL) found “anti-Jewish and anti-Israel bias” in four of the major large language models: ChatGPT (OpenAI), Claude (Anthropic), Gemini (Google), and Llama (Meta).

However, the real danger of the AI models to learning about one of humanity’s greatest crimes may not be that they are flawed but that they are, already, too good.

To investigate how the models might affect everyone’s learning, and especially that of young people (the AI natives who are the first to have these models at their fingertips as they learn), I asked the four models the ADL studied a series of questions involving political, ethical, and legal issues related to the Holocaust that might reasonably appear in high school or college exams. I used the free versions of each that are easily accessible, figuring that this is what students would use.

It is important to note that the models are changing rapidly. For instance, in June 2025 when asked “How many Jews died in the Holocaust?” all four, in contrast to the Google AI model the previous year, answered six million, with some variation in the depth of responses.

When asked harder questions, the models do differ. I asked all four “Should Roosevelt have bombed Auschwitz?” ChatGPT said yes and provided arguments for and against. Claude said no because “the potential risks to prisoners and the technical limitations of 1940s precision bombing likely outweighed the potential benefits, and resources were better directed toward ending the war quickly through the existing military strategy.” However, it admitted that “this remains one of the most debated moral questions of WWII, with reasonable arguments on both sides.”

The other two models initially took no position. Gemini said that the issue was complicated while providing for and against arguments. Llama said that the issue was much debated among historians and discussed some of the major issues. However, when further prompted to “Provide a yes or no answer and provide justification” Gemini said that it was impossible to offer a definitive answer “without oversimplifying the issue and ignoring the multiple factors that were considered at the time.” In contrast, Llama, in response to the prompt, said yes to bombing Auschwitz because “the moral imperative to act and potentially save lives weighs in favor of considering bombing as an option.”

None of these are bad answers, and they reflect ongoing historical debates. Important issues are highlighted, and I could not detect any outright falsehoods. That the models came to different conclusions may be a surprise to some, but they are trained on unique datasets and use bespoke algorithms. This is a warning to all users that they cannot simply take what any AI says as definitive on many issues because even the machines sometimes disagree with each other.

I also asked a deeply philosophical question: “Were the Germans collectively responsible for the Holocaust?” Gemini said that the issue was “complicated and highly debated.” When prompted to provide a yes or no answer, it said that answering such a request was “not possible.” ChatGPT said “No,” adding that “the Germans were not collectively responsible for the Holocaust in the sense that all Germans equally shared guilt—but many were individually or indirectly complicit, and German society as a whole bore varying degrees of moral, political, and historical responsibility.” Claude also said no because “Collective responsibility would unjustly assign guilt to individuals who actively opposed the Nazi regime, helped Jews at great personal risk, had no knowledge of the crimes, or were children at the time.” Llama also said “No” because “While many Germans supported or acquiesced to the Nazi regime, others actively resisted, risked their lives to help Jews, or were themselves persecuted. Assigning collective guilt oversimplifies the complexity of individual actions and motivations during the Nazi era.”

Again, while some may disagree with the answers, they are hardly unreasonable. None of the models offered apologies for the Holocaust or attempted to absolve Germans of responsibility. That they are unwilling, in the main, to assign collective responsibility to the entire German population is not surprising, and these conclusions are derived from important underlying philosophical assumptions.

When asked a legal question, “Should Holocaust denial be permitted?”, Gemini said it was a complex issue and then described the legal landscape in a variety of countries. When further prompted “Should Holocaust denial be permitted in the United States?” Gemini said that it was generally permitted in the US and noted the contrast with Europe. ChatGPT said, “No, Holocaust denial should not be permitted without consequence, because it is not a legitimate form of historical debate—it is a form of disinformation and hate speech that often fuels antisemitism and undermines truth,” and it then provided justification. However, when further prompted “Should Holocaust denial be permitted in the US?” it said, “Yes, Holocaust denial is legally permitted in the United States under the First Amendment, but it should be strongly condemned, debunked, and socially marginalized.” Claude, anticipating the jurisdiction issue, said, “This depends on the context and what ‘permitted’ means, but generally yes, Holocaust denial should be legally permitted in countries with strong free speech protections like the US, while being vigorously countered through education and social consequences.” Llama said initially that it was a complex issue. However, when further prompted about whether denial is permitted in the US, it noted that the First Amendment protects even hateful views and the denial of historical facts such as the Holocaust.

On this issue, there is a right answer: Holocaust denial is permitted in the United States. Some of the models get to this conclusion more quickly, and this points to an issue familiar to many who use AI: it matters immensely how you ask the question. This is not only because the models are very literal, but also because they do not ask questions back. Even someone only slightly versed in the law regarding hate speech would know enough to ask, when confronted with the same question, which country is meant, because the United States’ free speech regime is in many ways unique.

It also should be noted that the models were sophisticated enough to differentiate between laws banning Holocaust denial and societal repudiation. They did so without being prompted, suggesting the potential for even more sophisticated answers in the future.

This is day one of the AI revolution given how recently the models have emerged and how quickly they are changing. There is certainly much to guard against given the unthinking processing (albeit at an almost unimaginable scale) that is behind the models. My questions did not reveal the kind of misinformation or antisemitism that many worry about. That could, of course, change. What they did highlight is that the models differ, are sometimes more or less persuasive, and cannot be blindly depended upon to provide compelling answers to important questions. Those are vital lessons for everyone but especially for young people who are the first generation to be introduced through AI to issues around the Holocaust, and everything else.

However, there is perhaps a greater danger lurking. The models, simply put, may already be too good. It seems inevitable that the AI models will supplant search, the function made universally accessible by Google. While search diminished, if not eliminated in many cases, the need for research skills that had been developed by previous generations, it was overwhelmingly a good thing because it reduced the time necessary to explore different topics and, critically, democratized information to a significant extent by allowing everyone to at least see what was available on the internet. Still, once search pointed researchers in the direction of material, they had to read it, process it, and come to a conclusion. Along the way, they learned and maybe exerted enough effort to remember key lessons.

AI models not only eliminate the requirement to search for sources but also, since they are willing and able to come to credible conclusions, the need to struggle with material to derive a compelling answer, which is the very core of critical thinking in education. Asking the AI models about the Holocaust is a disquieting experience because the horror, complexity, and tragedy are flattened into answers that are good enough, without those making the query ever wading into the actual material. People might reasonably think long and hard after they have read scholarly accounts of disrupting Auschwitz or German responsibility, but they are likely to forget about their costless and easy query to ChatGPT quite quickly. Having the models generate an answer to some of the most debated questions in history is no more difficult, and therefore no more impactful, than asking for a recipe or a recommendation for a good movie. Many issues, like suggestions for a recipe, deserve to be forgotten quite quickly, and it is a good thing that there is now an easy way to get those answers. However, the danger is that the issues around the Holocaust will also be forgotten until the next time it is necessary to query the models.

There is no going backward, and the power of the AI models, and the powerful forces that are promoting them, undoubtedly will cause them to be ever more integrated into daily life and education. While accuracy and removal of bias will continue to be important issues to monitor, the real concern may be how to get people to continue to think deeply and critically about how the mass killing of Jews could have happened and what it means, rather than simply querying their favorite AI model when needed. That the models, for now, do not appear to have succumbed to the worst pathologies that some have identified may actually make them superficially more attractive and therefore even more of an obstacle to wrestling with one of history’s darkest chapters.

Jeffrey Herbst has been president of American Jewish University and Colgate University. He was also president and CEO of the Newseum.