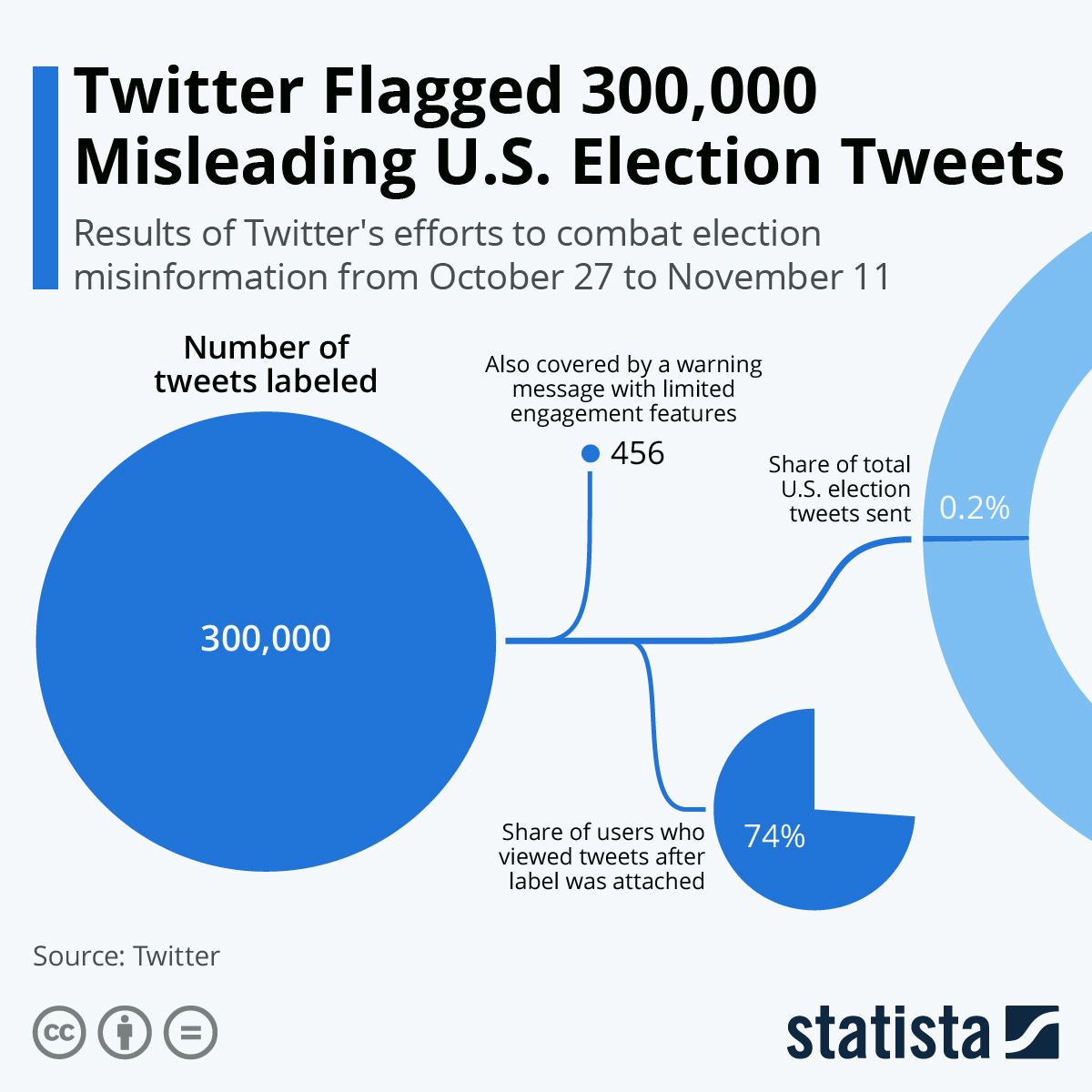

Twitter Flagged 300,000 Misleading U.S. Election Tweets

The New York Times found that a third of President Trump's tweets were labeled as such between November 3 and November 6.

In the wake of the 2016 election, Twitter, Facebook and other social networks were heavily criticized for failing to limit the spread of disinformation on their platforms. A week after one of the most tumultuous elections in American history, Twitter has released an overview of its efforts to label misleading tweets about the 2020 election. The company said it attached a label to 300,000 misleading tweets between October 27 and November 3 under its Civic Integrity Policy, amounting to 0.2 percent of all election-related tweets sent during that period.

Of those, 456 tweets received a stronger warning that obscured the text and limited user engagement. A separate analysis by The New York Times found that President Trump accounted for a significant share of these stronger warnings between November 3 and November 6, with a third of his tweets in that window labeled this way.

According to Twitter, 74 percent of the people who viewed the 300,000 labeled tweets saw them after a warning label had already been applied. The company also reported a 29 percent decrease in quote tweets of labeled tweets, which it attributed in part to a prompt that warned users before they shared. Twitter added that it moved early to get ahead of potentially misleading information by showing all of its U.S. users a series of timeline messages explaining that election results were likely to be delayed and that voting by mail is safe and legitimate.

You will find more infographics at Statista
